Before improving the quality of your data, you should profile your data.

To start, ensure that you have access to at least one Dataset. How do I add a Dataset?

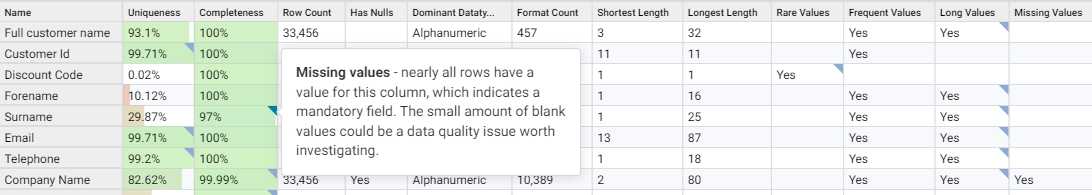

Profiling a set of data is the process of analyzing the values in each column to calculate a range of per-column attributes, such as minimum and maximum values, value and null counts, uniqueness and completeness of values.

Statistics on common formats and outlier/rare values are calculated for each column.

Any Dataset or View can be profiled, either using the Profile step in a Workflow, or in Explore mode. When profiling data in Explore mode, you can right-click a column name to drill down to that column's unique Values or Formats, and from there drill down again to the individual rows that contain that selected value or format.

| Attribute | Description |

|---|---|

| Name | The name of the column being profiled. |

| Summary | The description of the column being profiled. |

| Uniqueness | The uniqueness of the column (unique count as a percentage of row count). |

| Completeness | The completeness of the column (percentage values in the column that are non-null). |

| Row Count | The number of values in the column. |

| Has Nulls | Does the column have a null count greater than zero? |

| Dominant Datatype | The Datatype that is most common across values in this column. |

| Format Count | The number of unique format patterns for all the values in this column. |

| Shortest Length | The length of the shortest value in the column. |

| Longest Length | The length of the longest value in the column. |

| Rare Values | Whether there are unexpected infrequent values in the column. |

| Frequent Values | Whether there are unexpectedly frequent values in the column. |

| Long Values | Whether there are abnormally long values in the column. |

| Missing Values | Whether there are rare occurrences of NULLs in the column. |

Includes the core attributes as well as:

| Attribute | Description |

|---|---|

| Unique Count | The number of unique values in the column (same as the count of grouped values. Nulls are counted as a value). |

| Minimum | The minimum value in the column (first alphabetically, earliest date, lowest numeric value). |

| Maximum | The maximum value in the column (last alphabetically, latest date, highest numeric value). |

| Overall Datatype | The Datatype that would be required to store all values. If a column has both Numeric and Date datatypes, the overall type would be Alphanumeric. |

| Sum | The sum of all numeric values in this column. |

| Standard Deviation | The standard deviation of all numeric values in this Column. Non-numeric values are treated as zero. |

| Average | The average of all numeric values in this column (sum of numbers / number count). |

| Precision | The overall numeric precision for this column, which is the largest number of digits in a number including digits on both sides of the decimal point. |

| Scale | The overall numeric scale for this column which is the most number of digits to the right of the decimal point. |

| Zero Count | The number of rows in the Table containing a value in this column that is zero |

| Negative Values | The count of numeric values in this column that are less than zero |

| Least Common Value | The overall least frequently occurring value in the column. |

| Least Common Count | The count of the number of time the least common value occurs in the column. |

| Most Common Value | The overall most frequently occurring value in the column. |

| Most Common Count | The count of the number of time the most common value occurs in the column. |

| Least Common Format | The least frequently occurring format pattern in this column. |

| Least Common Format Count | The count of the number of times the least frequently occurring format pattern occurs in this column. |

| Most Common Format | The most frequently occurring format pattern in this column. |

| Most Common Format Count | The count of the number of times the most frequently occurring format pattern occurs in this column. |

| Average Length | The average lengths of all values in the column. |

| Length Deviation | The standard deviation of the lengths of all values in the column. |

| Frequency Deviation | The standard deviation of the frequency of occurrence of each value across the set of values in the column. |

| Format Frequency Deviation | The standard deviation of the frequency of occurrence of each format pattern across the set of format patterns in the column. |

| Alphanumeric Uniqueness | The uniqueness of the alphanumeric values in the column (unique alphanumeric values as a percentage of all alphanumeric values). |

| Alphanumeric Unique Count | The number of unique alphanumeric values in the column. |

| Alphanumeric Completeness | The completeness of the alphanumeric values column (values in the column that are alphanumeric as a percentage of the total row count). |

| Alphanumeric Count | The count of alphanumeric values in the column. |

| Alphanumeric Minimum | The first alphanumeric value in the column alphabetically. |

| Alphanumeric Maximum | The last alphanumeric value in the column alphabetically. |

| Number Uniqueness | The uniqueness of the numeric values in the column (unique numeric values in the column as a percentage of all numeric values). |

| Number Unique Count | The number of unique numeric values in the column. |

| Number Completeness | The completeness of the numeric values column (values in the column that are numeric as a percentage of the total row count). |

| Number Count | The count of numeric values in the column. |

| Number Minimum | The lowest numeric value in the column. |

| Number Maximum | The highest numeric value in the column. |

| Check Sum | The checksum for all the values in the column. |

| Date Uniqueness | The uniqueness of the date values in the column (unique date values in the column as a percentage of all date values). |

| Date Unique Count | The number of unique date values in the column. |

| Date Completeness | The completeness of the date values column (values in the column that are date as a percentage of the total row count). |

| Date Count | The count of date values in the column. |

| Date Minimum | The earliest date value in the column. |

| Date Maximum | The latest date value in the column. |

| Nullity | The percentage of rows in the table where the value in this column is null. |

| Null Count | The number of rows in the table containing a value in this column that is null. |

| Key Check | Whether the data in the column denotes this is a perfect key or is a key that is broken. |

| Rare Formats | Whether there are unexpected infrequent formats in the column. |

| Short Values | Whether there are abnormally short values in the column. |

| Low Amounts | Whether there are abnormally low numeric values in the column. |

| High Amounts | Whether there are abnormally high numeric values in the column. |

| Sequence | Whether the values in the column are sequential numbers. |

| Average Frequency | The average frequency of occurrence of a value across the set of values in the column. |

| Average Format Frequency | The average of frequency of occurrence of a format pattern across the set of values in the column. |

| Sum Squared | The sum of the squares of all numeric values in the column |

| Length Sum Squared | The sum of the square of lengths of all values in the column. |

| Length Sum | The sum of the lengths of all values in the column. |

| Sum Squared Of Frequency | The sum of the square of frequency of occurrence of each value in the column. |

| Sum Squared Of Format Frequency | The sum of the square of frequency of occurrence of each format pattern of values in the column. |

| Data Tags | Data tags assigned to the column. |

| Sensitive Data Tags | Data tags with sensitive flag assgined. |

Profile results are interactive in that users can click in to see a list of the Values or Formats for a chosen column. This list contains the row count and percentage distribution to understand which are the dominant items.

It is then possible to further drilldown to the specific Rows in the original underlying data that contain selected value(s) or format(s).

One aspect of Data Studio's profiling process is to generate a format pattern (and simple format pattern) for each value replacing any letter with 'A' or number with '9'. These format patterns can then be used to carry out tasks like:

The format pattern is calculated using the following rules:

@, . (period), , (comma), _ (underscore), - (hyphen), and + are unchanged.< and >) become ( and )./ (forward slash), \ (backslash), | (pipe) are /.£, €, ¥ become €.&, =, ®, ‡, %, ¬, ´ (the acute accent), become # . This applies to characters with the following Unicode category designations (unless a previous rule already handles them):=, ÷.The simple format pattern is calculated in the same way as the format pattern, except that it removes consecutive repeated format characters after the first one.

| Value | Format | Simple format |

|---|---|---|

| MNOPQ3344ZZ | AAAAA9999AA | A9A |

| SK10 2ED | AA99S9AA | A9SA |

| 1-888-397-3742 | 9-999-999-9999 | 9-9-9-9 |

| test@gmail.com | AAAA@AAAAA.AAA | A@A.A |

| ($250) | (€999) | (€9) |

| H&J Ltd. | A#ASAAA. | A#ASA. |

| {1/2}34 | (9/9)99 | (9/9)9 |

An outlier is a value that appears to deviate markedly from other members of the sample in which it occurs. For data, it is a value which has an unusual attribute or characteristic, for example:

The Outliers profile attributes in Data Studio highlight values which are very different from the average (mean or expected) value in some way:

For characteristics based on the relative sizes of a set of values, Data Studio uses a statistical calculation based on standard deviations and the average. The outliers statistics will highlight values which are at the extremes of a normal distribution curve, in other words those occurring no more than twice per thousand values.

For characteristics based on a single statistic, Data Studio uses a threshold value of rarity threshold of less than one in 1000 to determine whether something unusual is present. Records whose values are more than 3.3 standard deviations (the SD tolerance) above or below the average (mean) value are rare/high/low.

Some outlier statistics require at least two unique values to be present in the data set to ever return 'Yes'.

Profile statistics can be quickly converted into validation rulesusing the Suggest validation rules action. Selecting this action will bring up a list of suggested rules for each profiled column of data.

Once all rules and settings have been applied, a new Workflow will be automatically created containing a Validate step with the rules, which can be further edited if required.

The statistical columns included and the order they appear in can be updated. Go to Step settings > Profile step settings and configure as required.

To define your own profile statistics, click Add attribute and select a Function. The value type returned by the Function determines the statistical column options:

These calculated Profile columns can then be re-ordered/removed just like the standard Profile columns.

A pre-set created in the 'System space' will be availble to select in all Spaces.

You can set one of your Profile step settings as the Default profile preset for the Space. The default will be automatically selected when profiling data, which will be Core statistics if nothing has been set.

The User-defined default within a Space will take priority over a default set in the 'System space'.

Any Dataset or View can be profiled in two clicks by right-clicking on it (or selecting the Actions menu) in the list screen and selecting Profile. This will include all columns in the table and use the default profile preset statistics.